Enterprise Maturity Assessment Tool

A data-driven platform to assess, track, and improve agility across enterprise teams.

case study

engagement type

Individual Contributor

role

Senior UX Designer

Duration

2.5 Years

tools

Adobe XD, Miro, Optimal Workshop

Project Overview

Problem Statement

Organisations undergoing digital transformation often lack a scalable and consistent way to assess team maturity and identify problem areas across Agile, DevOps, QE, and SRE practices. Existing approaches are largely manual, time-consuming, and fragmented, making it difficult to gather meaningful insights, compare progress, and provide timely, actionable recommendations to guide transformation efforts.

Objective

Create a scalable and consistent way for organisations to assess team maturity, surface problem areas, and generate actionable insights that support informed decision-making throughout their digital transformation journey.

Target Audience

Enterprise leaders, transformation teams, agile coaches, and delivery managers responsible for driving and monitoring organisational agility.

My Contributions

UX Research & Strategy

Stakeholder Engagement

Wireframing & Prototyping

Design System Setup

Usability Testing

Information Architecture & Experience Design

Feature Prioritisation & Task Planning

Research & Discovery

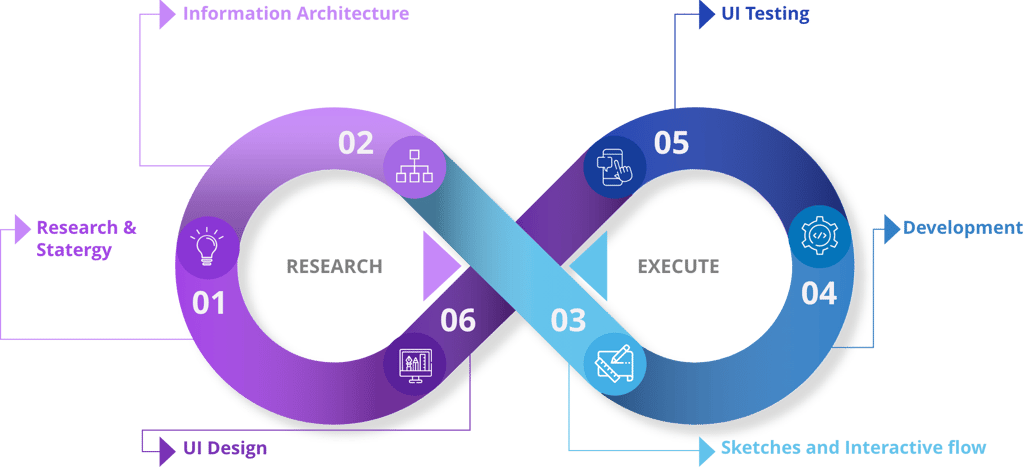

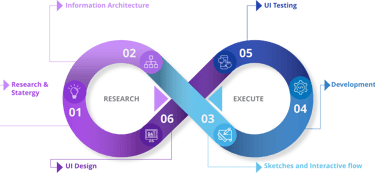

Our UX Approach

Our approach puts users at the centre of the design and development process and establishes an iterative cycle of research, design, and evaluation. From sales and marketing through to customer adoption and connection, we apply an end-to-end research and design process aimed at delivering higher ROI and better experiences.

Our guiding principles for building brilliant user experiences:

Purposeful design

Products should be aimed at achieving user goals. Good design is not the same as a good user experience; it is a catalyst for one.

Iterative

Users evolve, and so do their expectations of the product. Seek real user feedback to continuously evolve and iterate on the user experience.

Metrics driven

Trust data. Incorporate feedback and build with speed to reduce risk.

Inclusive

Consistent experience for diverse users. Build for a global audience.

Business Goals

The initial phase of the project involved evaluating the client's existing processes and identifying business goals, UX opportunities, and efficiency gaps. To do this, we conducted deep-dive discovery sessions with our business stakeholders.

Some of the business goals included:

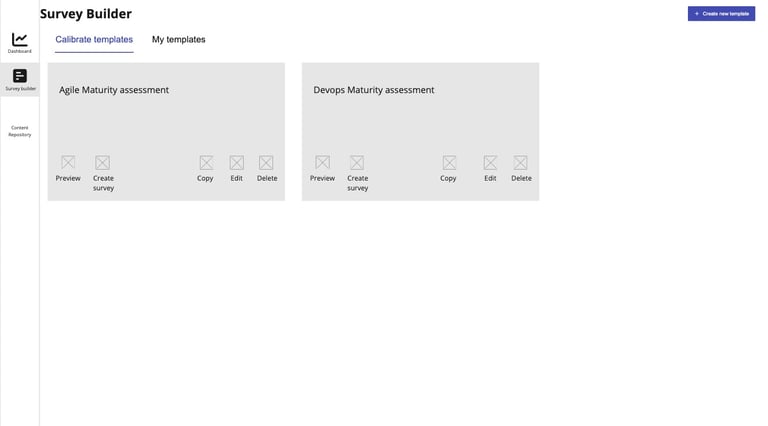

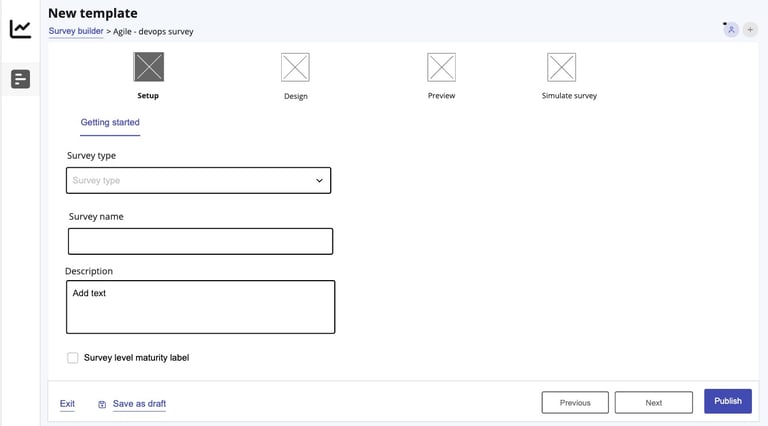

An easy and efficient way to create surveys, with the ability to pick them from an available knowledge base.

Productive facilitation of surveys to understand which tools and processes the client currently uses.

An efficient way to draw inferences from the answers so that better recommendations can be given.

Consolidation of subjective responses into an objective metric that shows where the company stands in terms of Agile, DevOps, SRE, and so on.

The recommendations and reports delivered to the company should generate business for our customer.
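One way to picture the goal of turning subjective responses into an objective metric is a weighted average per practice area. The sketch below is illustrative only: the 1 to 5 rating scale, the question weights, and the names `Response` and `maturity_score` are assumptions made for this example, not the scoring model used in the product.

```python
from dataclasses import dataclass


@dataclass
class Response:
    practice: str   # practice area, e.g. "Agile", "DevOps", "QE", "SRE"
    rating: int     # assumed 1-5 self-assessment scale
    weight: float   # assumed relative importance of the question


def maturity_score(responses: list[Response]) -> dict[str, float]:
    """Return the weighted average rating for each practice area."""
    totals: dict[str, float] = {}
    weights: dict[str, float] = {}
    for r in responses:
        totals[r.practice] = totals.get(r.practice, 0.0) + r.rating * r.weight
        weights[r.practice] = weights.get(r.practice, 0.0) + r.weight
    # Skip practice areas whose weights sum to zero to avoid dividing by zero.
    return {p: round(totals[p] / weights[p], 2) for p in totals if weights[p] > 0}


# Hypothetical responses, invented purely for illustration.
sample = [
    Response("Agile", 4, 2.0),
    Response("Agile", 2, 1.0),
    Response("DevOps", 3, 1.0),
]
scores = maturity_score(sample)
print(scores)
```

Keeping the weights on the questions rather than in the formula would let an administrator tune a scoring model without changing how scores are calculated.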

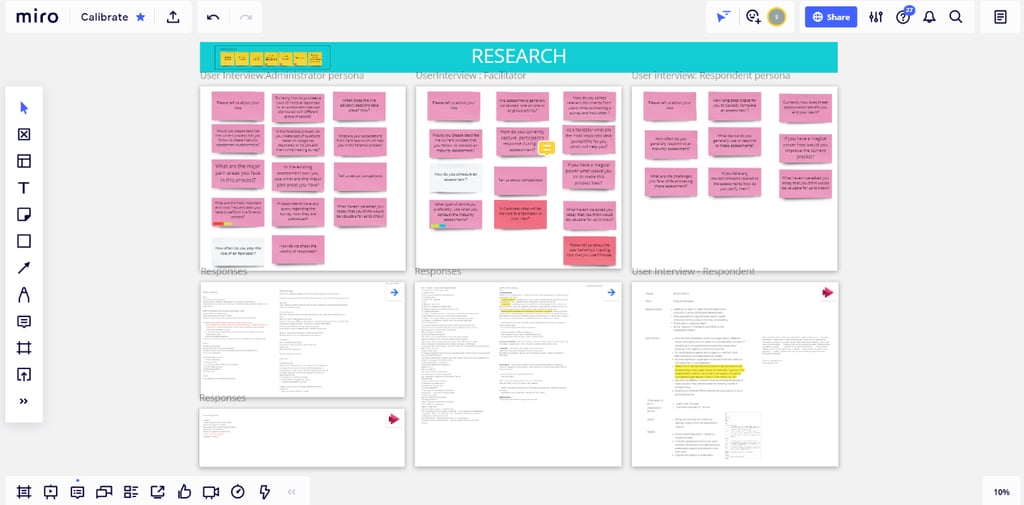

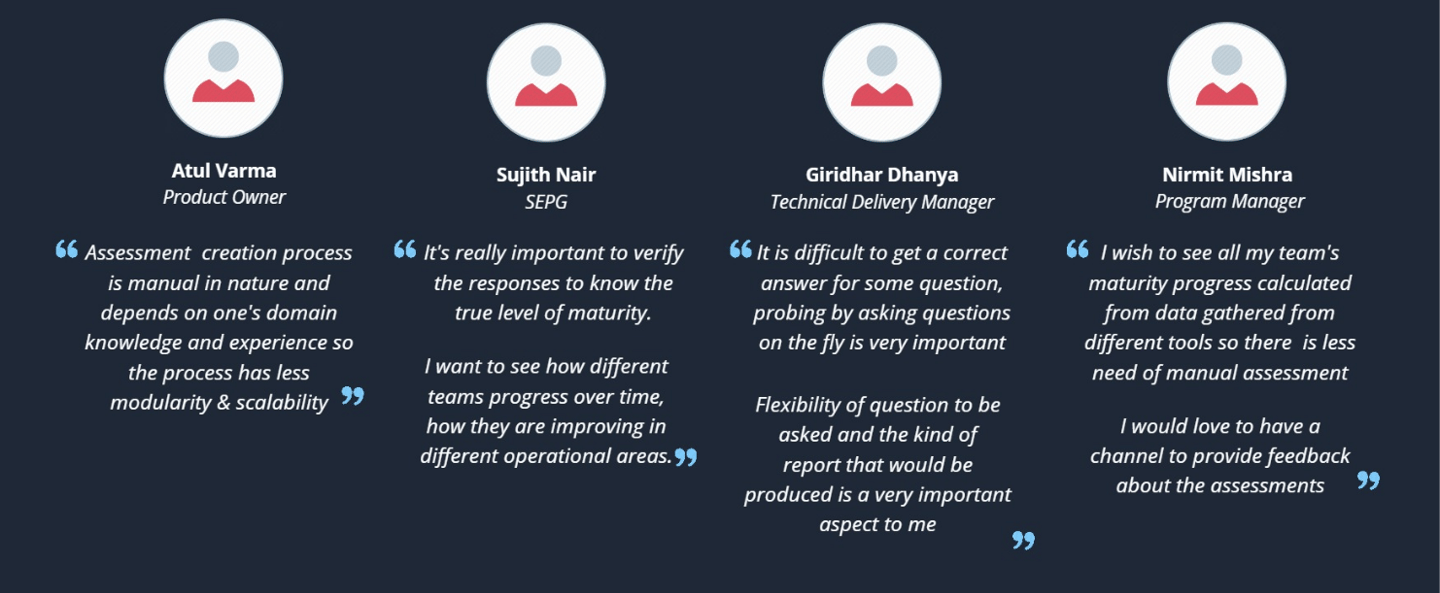

User Collaboration & Discovery

We carried out user research using a blend of in-person and remote methods. Remote tools such as Miro played a significant role in user testing and collaboration during the pandemic and its travel restrictions.

Personas

Key Findings & Recommendations

We heard a lot...

08 Focus Group Discussions

06 User Interviews

15 Hours of Interviews

03 Personas Created

Administrator Persona

Role & context

An enterprise transformation specialist responsible for designing agility assessments, configuring maturity models, and generating insights to guide organisations across Agile, DevOps, QE, and SRE practices.

core responsibilities

Design and manage maturity assessments across teams and practice areas

Define scoring models and roadmaps to support organisational transformation

Analyse responses and translate data into actionable recommendations

Maintain consistency and quality across multiple assessments and clients

key needs

A scalable, modular way to create and manage assessments

Centralised access to responses, metrics, and maturity reports

Consistent, reusable templates with version control

Clear visualisations to communicate insights and progress

pain points

Assessment creation and management is largely manual and time-consuming

Heavy reliance on spreadsheets leads to inconsistency and data fragmentation

Tracking and analysing responses across teams is cumbersome

Generating meaningful reports and roadmaps requires significant manual effort

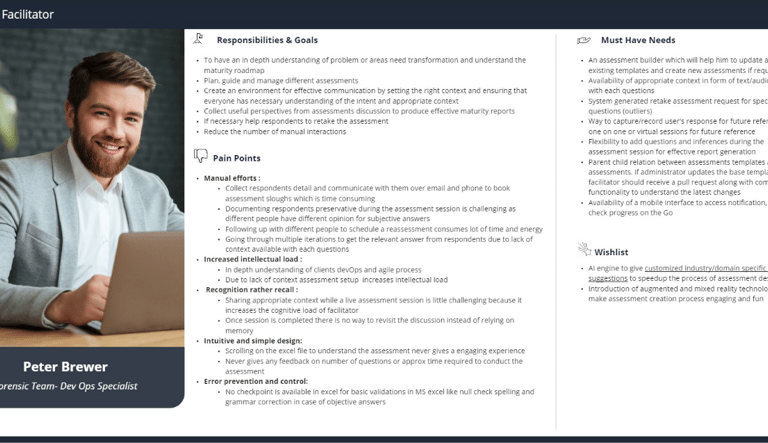

Facilitator Persona

Role & context

A DevOps or Agile specialist responsible for facilitating maturity assessments with enterprise teams, guiding discussions, collecting contextual insights, and ensuring assessments are completed accurately and efficiently.

core responsibilities

Facilitate assessment sessions and guide teams through questions

Provide context and clarification to ensure accurate responses

Capture qualitative insights and inferences during discussions

Coordinate assessment schedules and follow-ups

key needs

Guided assessment flows that reduce cognitive load

Contextual prompts to explain questions during sessions

Ability to capture notes and insights alongside responses

Visibility into assessment progress and participation

pain points

High manual effort in scheduling and follow-ups

Cognitive overload when interpreting subjective responses

Reliance on memory or scattered notes after sessions

Fragmented workflows across emails and spreadsheets

Respondent Persona (Secondary)

Respondents are program or delivery managers who take part in agility assessments to reflect on their team’s current practices and understand progress over time. They need a straightforward, well-contextualised assessment experience that is quick to complete and clearly explains how their input will be used. Manual processes, unclear questions, and limited feedback reduce engagement and make it harder for them to see value in participating.

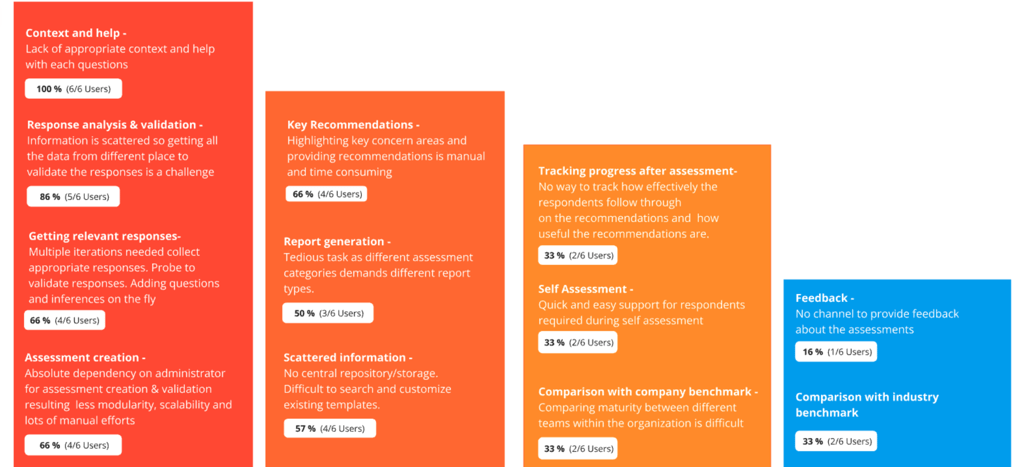

While the research surfaced several findings across roles, the highlights below reflect a few key observations that most influenced design decisions.

Key Observations

Manual and fragmented assessment creation made it difficult to scale and maintain consistency across teams.

Limited visibility into progress and recommendations increased reliance on manual tracking.

Lack of context within questions increased cognitive load during assessment sessions.

Multiple iterations were required to validate responses, slowing down facilitation.

Unclear questions and lack of feedback reduced response quality and engagement.

No easy way to track progress or compare results over time limited perceived value.

UX Direction

Introduced reusable templates and centralised assessment management.

Enabled automated analysis and dashboards to track maturity and follow-through.

Added contextual guidance and in-session support.

Supported response validation and reassessment within the platform.

Designed a simple, well-contextualised assessment experience.

Visualised progress and benchmark results clearly.

UX Strategy

Solution Development

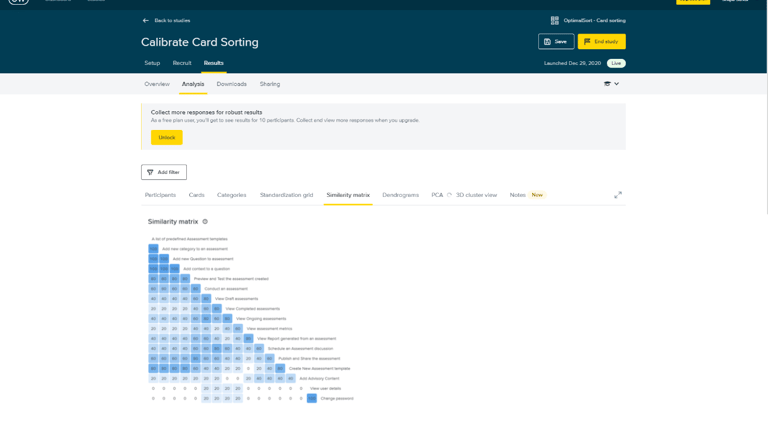

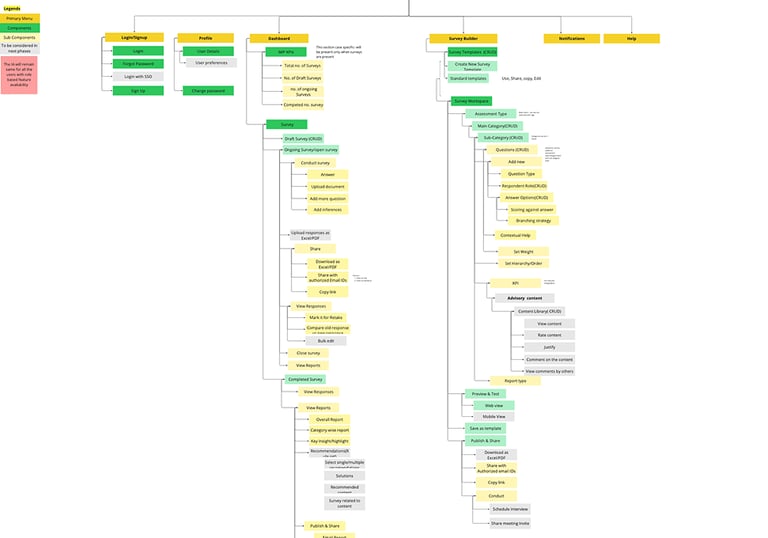

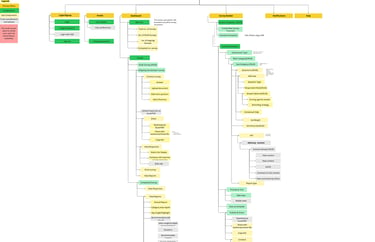

Information Architecture

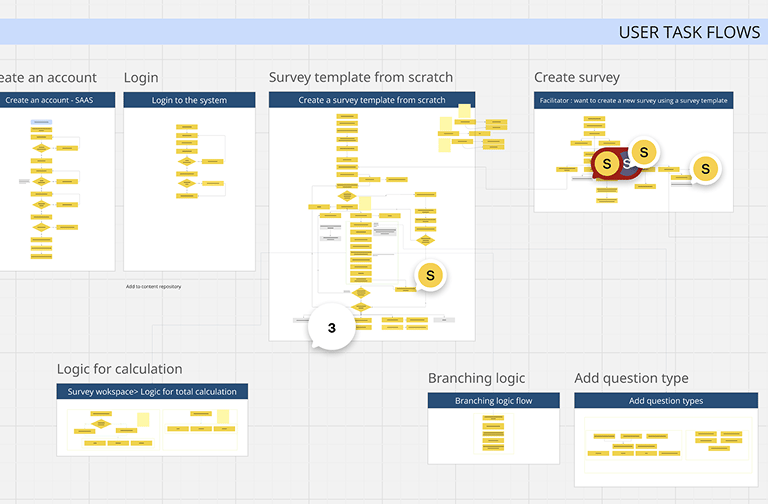

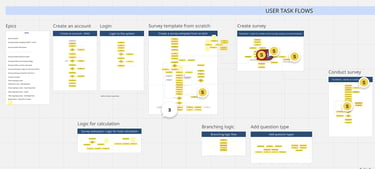

Task Flows

Wireframes

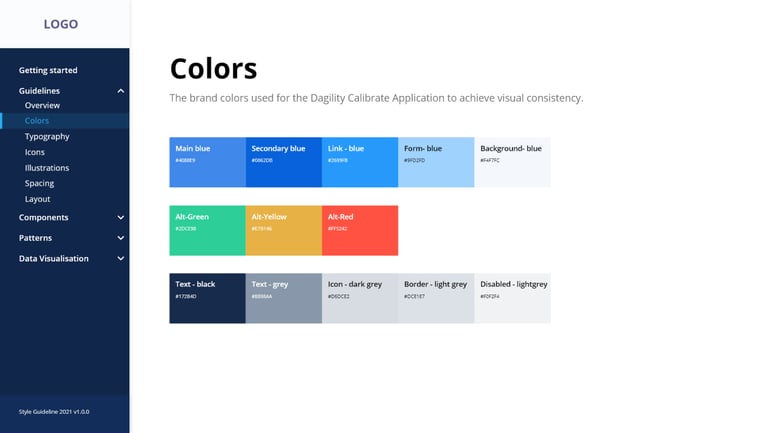

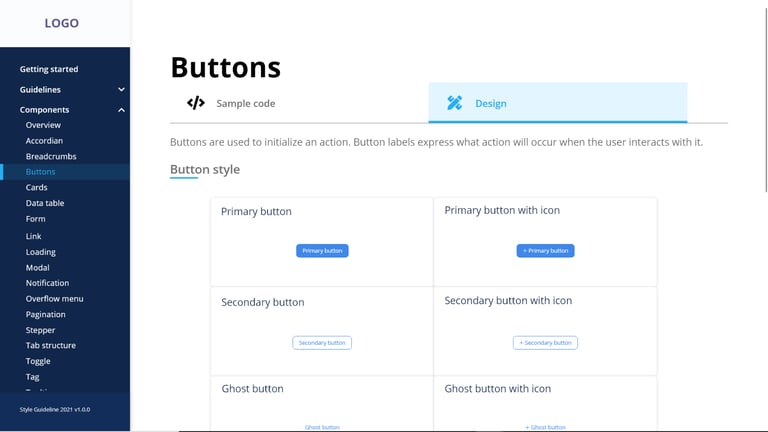

Design System Foundations

Solution development followed an iterative, agile approach, with design decisions evolving through continuous collaboration, validation, and refinement. Rather than documenting every intermediate state, the focus was on defining clear system structure, role-based flows, and scalable patterns that could support enterprise-wide adoption.

Our style guide is part of our design language. When used correctly, it improves communication, reinforces our brand, and enhances the user experience.

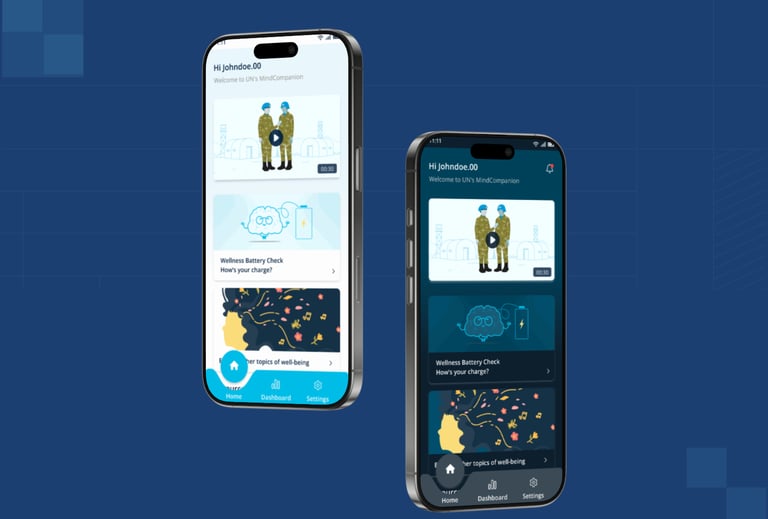

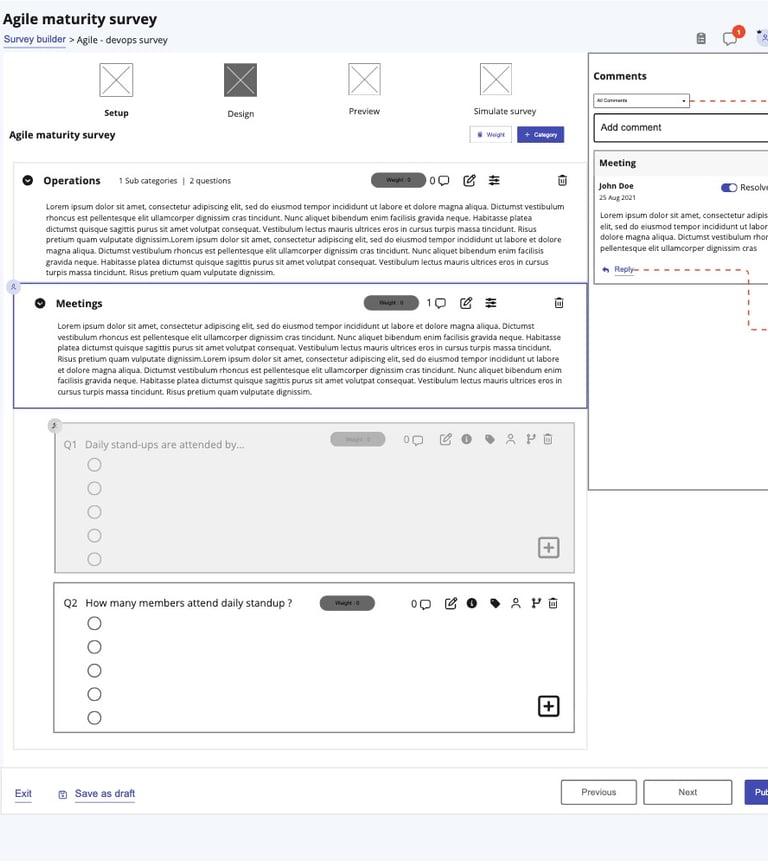

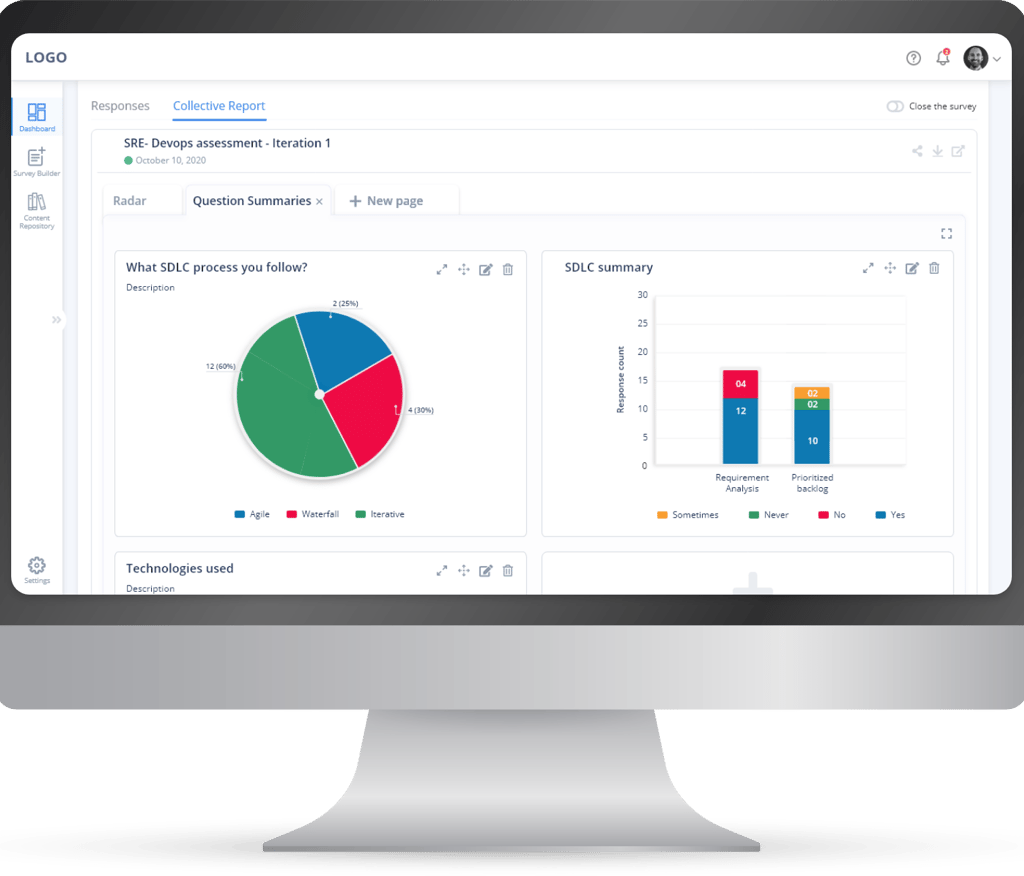

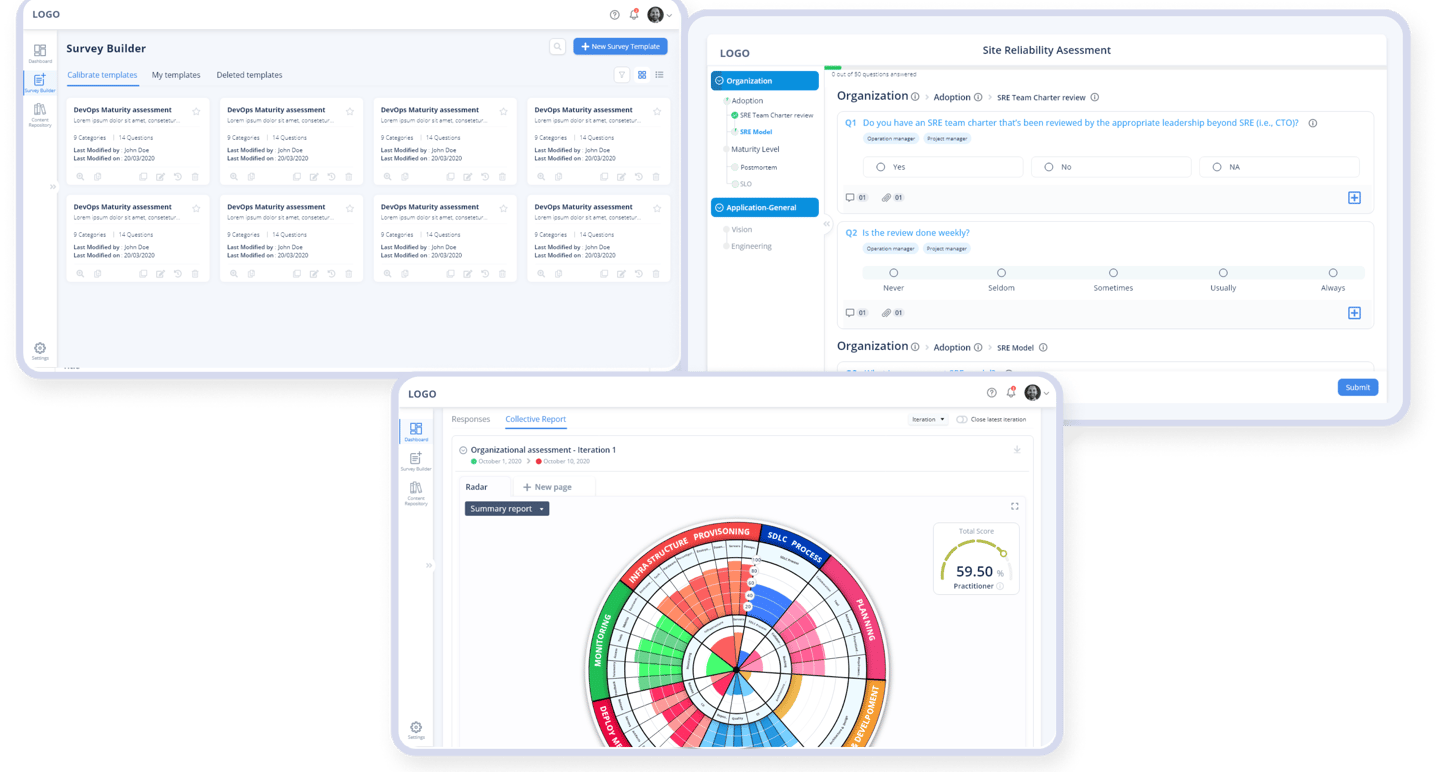

Final Mockup

An intelligent assessment platform that collects and analyses data to help enterprises improve value, quality, and process efficiency. Integration with various in-house tools enables more accurate, detailed, and interactive assessment reports.

KPI-driven design that empowers users to execute workflows far more efficiently and with a much lower learning curve.

Modernized components for improved usability.

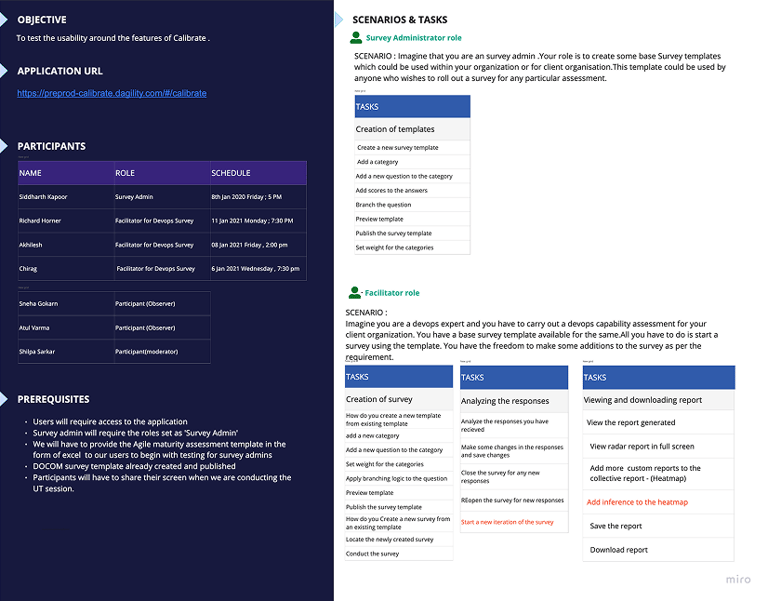

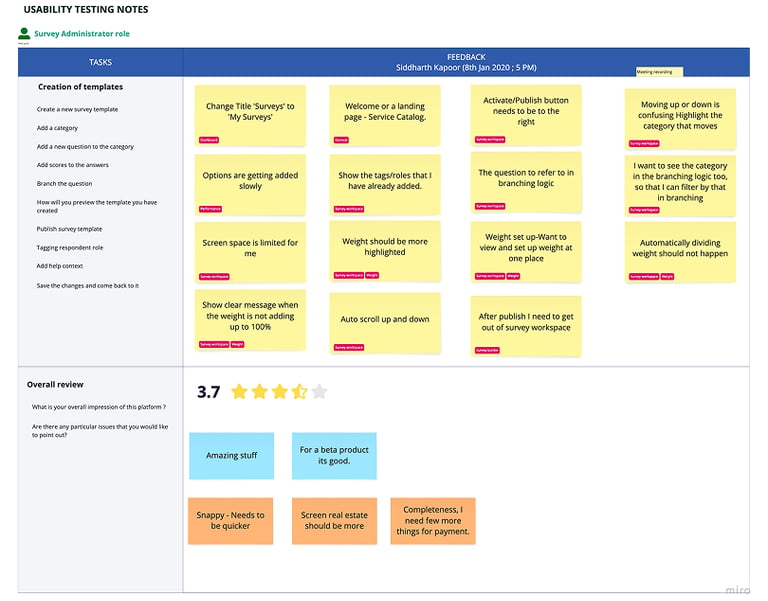

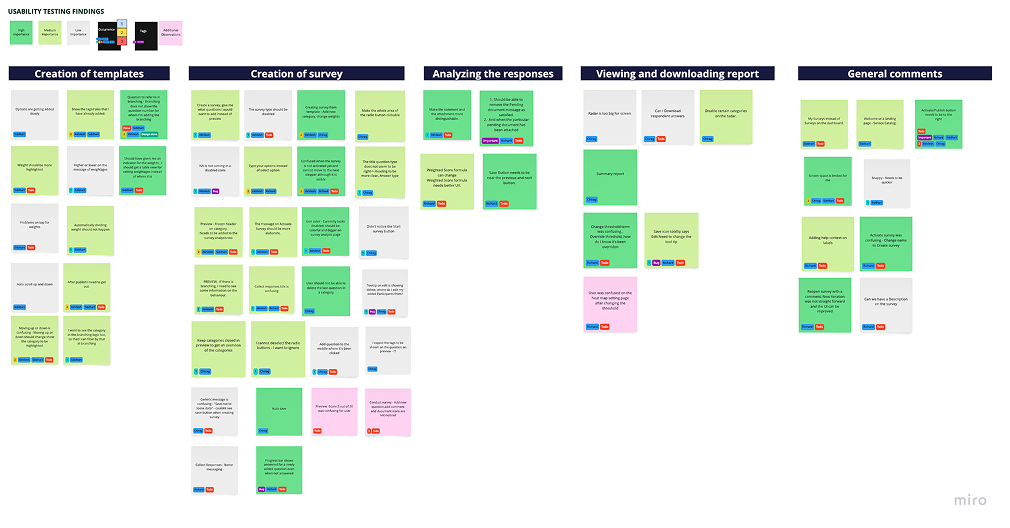

Moderated Usability Testing

Usability testing was conducted iteratively as moderated, task-based sessions mapped to real enterprise scenarios across administrator, facilitator, and respondent roles. Rather than evaluating isolated screens, sessions focused on how users completed end-to-end assessment workflows under realistic conditions.

Testing Approach

Defined role-specific scenarios and tasks based on key workflows such as assessment creation, facilitation, response analysis, and reporting.

Conducted moderated think-aloud sessions, capturing behavioural cues, hesitation points, and decision breakdowns.

Logged observations task-by-task to understand where users struggled, adapted, or relied on workarounds.

Key Areas Evaluated

Cognitive load during complex actions such as template creation, branching, and weighting.

Clarity of system feedback, validations, and progress indicators during multi-step tasks.

Effectiveness of information structure when analysing responses and interpreting reports.

Usability Testing & Iteration

Feedback & Iterations

Synthesised raw observations into task-level findings, clustered by workflow stage.

Identified recurring friction patterns across users and roles using affinity mapping.

Prioritised design changes based on frequency, severity, and downstream impact, informing refinements to navigation, guidance, and system feedback.
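As a rough illustration of prioritising by frequency, severity, and downstream impact, the three factors can be combined into a single score and the findings sorted by it. This is a minimal sketch under stated assumptions: the rating scales, the multiplicative formula, and the names `Finding`, `priority`, and `rank` are hypothetical, and the sample findings are placeholders rather than results from the study.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    frequency: int  # assumed: number of participants who hit the issue
    severity: int   # assumed scale: 1 = cosmetic ... 4 = blocks the task
    impact: int     # assumed scale: 1 = isolated ... 3 = affects later steps


def priority(finding: Finding) -> int:
    """Combine the three factors into one score (assumed formula)."""
    return finding.frequency * finding.severity * finding.impact


def rank(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest priority."""
    return sorted(findings, key=priority, reverse=True)


# Placeholder findings, invented purely for illustration.
findings = [
    Finding("Finding A", frequency=5, severity=2, impact=1),
    Finding("Finding B", frequency=3, severity=4, impact=3),
    Finding("Finding C", frequency=6, severity=1, impact=1),
]
ranked_titles = [f.title for f in rank(findings)]
print(ranked_titles)
```

Multiplying the factors means an issue that is both severe and far-reaching outranks a frequent but cosmetic one; a weighted sum would be an equally reasonable choice.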

Key Reflections

Challenges Faced

Designing for multiple roles

Balancing the needs of administrators, facilitators, and respondents within a single enterprise system required careful trade-offs.

Reducing manual effort at scale

Replacing Excel-based workflows while retaining flexibility for custom assessments was a key challenge.

Making complex data usable

Presenting maturity insights and recommendations clearly without overwhelming users required strong prioritisation.

Learnings

Structure before polish

Clear system structure and flows had more impact than visual refinement in an enterprise context.

Context reduces cognitive load

In-flow guidance and feedback improved clarity, confidence, and task completion.

Synthesis drives impact

Early clustering of findings helped prioritise changes with the highest value.

© 2026. All rights reserved.